diff --git a/.gitignore b/.gitignore

index f6e46e88..02937ce9 100644

--- a/.gitignore

+++ b/.gitignore

@@ -32,3 +32,9 @@ junit-reports/

# VSCode files

.vscode/

+

+terraform-kickstarter/.terraform.lock.hcl

+

+terraform-kickstarter/.terraform/providers/registry.terraform.io/hashicorp/aws/3.56.0/darwin_amd64/terraform-provider-aws_v3.56.0_x5

+

+terraform-kickstarter/terraform.tfstate

diff --git a/LICENSE b/LICENSE

index b9b7f40f..0903645a 100644

--- a/LICENSE

+++ b/LICENSE

@@ -1,2 +1,201 @@

-Apache License 2.0 as specified in each file. You may obtain a copy of the License at LICENSE-APACHE-2.0 and

-http://www.apache.org/licenses/LICENSE-2.0

+ Apache License

+ Version 2.0, January 2004

+ http://www.apache.org/licenses/

+

+ TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

+

+ 1. Definitions.

+

+ "License" shall mean the terms and conditions for use, reproduction,

+ and distribution as defined by Sections 1 through 9 of this document.

+

+ "Licensor" shall mean the copyright owner or entity authorized by

+ the copyright owner that is granting the License.

+

+ "Legal Entity" shall mean the union of the acting entity and all

+ other entities that control, are controlled by, or are under common

+ control with that entity. For the purposes of this definition,

+ "control" means (i) the power, direct or indirect, to cause the

+ direction or management of such entity, whether by contract or

+ otherwise, or (ii) ownership of fifty percent (50%) or more of the

+ outstanding shares, or (iii) beneficial ownership of such entity.

+

+ "You" (or "Your") shall mean an individual or Legal Entity

+ exercising permissions granted by this License.

+

+ "Source" form shall mean the preferred form for making modifications,

+ including but not limited to software source code, documentation

+ source, and configuration files.

+

+ "Object" form shall mean any form resulting from mechanical

+ transformation or translation of a Source form, including but

+ not limited to compiled object code, generated documentation,

+ and conversions to other media types.

+

+ "Work" shall mean the work of authorship, whether in Source or

+ Object form, made available under the License, as indicated by a

+ copyright notice that is included in or attached to the work

+ (an example is provided in the Appendix below).

+

+ "Derivative Works" shall mean any work, whether in Source or Object

+ form, that is based on (or derived from) the Work and for which the

+ editorial revisions, annotations, elaborations, or other modifications

+ represent, as a whole, an original work of authorship. For the purposes

+ of this License, Derivative Works shall not include works that remain

+ separable from, or merely link (or bind by name) to the interfaces of,

+ the Work and Derivative Works thereof.

+

+ "Contribution" shall mean any work of authorship, including

+ the original version of the Work and any modifications or additions

+ to that Work or Derivative Works thereof, that is intentionally

+ submitted to Licensor for inclusion in the Work by the copyright owner

+ or by an individual or Legal Entity authorized to submit on behalf of

+ the copyright owner. For the purposes of this definition, "submitted"

+ means any form of electronic, verbal, or written communication sent

+ to the Licensor or its representatives, including but not limited to

+ communication on electronic mailing lists, source code control systems,

+ and issue tracking systems that are managed by, or on behalf of, the

+ Licensor for the purpose of discussing and improving the Work, but

+ excluding communication that is conspicuously marked or otherwise

+ designated in writing by the copyright owner as "Not a Contribution."

+

+ "Contributor" shall mean Licensor and any individual or Legal Entity

+ on behalf of whom a Contribution has been received by Licensor and

+ subsequently incorporated within the Work.

+

+ 2. Grant of Copyright License. Subject to the terms and conditions of

+ this License, each Contributor hereby grants to You a perpetual,

+ worldwide, non-exclusive, no-charge, royalty-free, irrevocable

+ copyright license to reproduce, prepare Derivative Works of,

+ publicly display, publicly perform, sublicense, and distribute the

+ Work and such Derivative Works in Source or Object form.

+

+ 3. Grant of Patent License. Subject to the terms and conditions of

+ this License, each Contributor hereby grants to You a perpetual,

+ worldwide, non-exclusive, no-charge, royalty-free, irrevocable

+ (except as stated in this section) patent license to make, have made,

+ use, offer to sell, sell, import, and otherwise transfer the Work,

+ where such license applies only to those patent claims licensable

+ by such Contributor that are necessarily infringed by their

+ Contribution(s) alone or by combination of their Contribution(s)

+ with the Work to which such Contribution(s) was submitted. If You

+ institute patent litigation against any entity (including a

+ cross-claim or counterclaim in a lawsuit) alleging that the Work

+ or a Contribution incorporated within the Work constitutes direct

+ or contributory patent infringement, then any patent licenses

+ granted to You under this License for that Work shall terminate

+ as of the date such litigation is filed.

+

+ 4. Redistribution. You may reproduce and distribute copies of the

+ Work or Derivative Works thereof in any medium, with or without

+ modifications, and in Source or Object form, provided that You

+ meet the following conditions:

+

+ (a) You must give any other recipients of the Work or

+ Derivative Works a copy of this License; and

+

+ (b) You must cause any modified files to carry prominent notices

+ stating that You changed the files; and

+

+ (c) You must retain, in the Source form of any Derivative Works

+ that You distribute, all copyright, patent, trademark, and

+ attribution notices from the Source form of the Work,

+ excluding those notices that do not pertain to any part of

+ the Derivative Works; and

+

+ (d) If the Work includes a "NOTICE" text file as part of its

+ distribution, then any Derivative Works that You distribute must

+ include a readable copy of the attribution notices contained

+ within such NOTICE file, excluding those notices that do not

+ pertain to any part of the Derivative Works, in at least one

+ of the following places: within a NOTICE text file distributed

+ as part of the Derivative Works; within the Source form or

+ documentation, if provided along with the Derivative Works; or,

+ within a display generated by the Derivative Works, if and

+ wherever such third-party notices normally appear. The contents

+ of the NOTICE file are for informational purposes only and

+ do not modify the License. You may add Your own attribution

+ notices within Derivative Works that You distribute, alongside

+ or as an addendum to the NOTICE text from the Work, provided

+ that such additional attribution notices cannot be construed

+ as modifying the License.

+

+ You may add Your own copyright statement to Your modifications and

+ may provide additional or different license terms and conditions

+ for use, reproduction, or distribution of Your modifications, or

+ for any such Derivative Works as a whole, provided Your use,

+ reproduction, and distribution of the Work otherwise complies with

+ the conditions stated in this License.

+

+ 5. Submission of Contributions. Unless You explicitly state otherwise,

+ any Contribution intentionally submitted for inclusion in the Work

+ by You to the Licensor shall be under the terms and conditions of

+ this License, without any additional terms or conditions.

+ Notwithstanding the above, nothing herein shall supersede or modify

+ the terms of any separate license agreement you may have executed

+ with Licensor regarding such Contributions.

+

+ 6. Trademarks. This License does not grant permission to use the trade

+ names, trademarks, service marks, or product names of the Licensor,

+ except as required for reasonable and customary use in describing the

+ origin of the Work and reproducing the content of the NOTICE file.

+

+ 7. Disclaimer of Warranty. Unless required by applicable law or

+ agreed to in writing, Licensor provides the Work (and each

+ Contributor provides its Contributions) on an "AS IS" BASIS,

+ WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

+ implied, including, without limitation, any warranties or conditions

+ of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

+ PARTICULAR PURPOSE. You are solely responsible for determining the

+ appropriateness of using or redistributing the Work and assume any

+ risks associated with Your exercise of permissions under this License.

+

+ 8. Limitation of Liability. In no event and under no legal theory,

+ whether in tort (including negligence), contract, or otherwise,

+ unless required by applicable law (such as deliberate and grossly

+ negligent acts) or agreed to in writing, shall any Contributor be

+ liable to You for damages, including any direct, indirect, special,

+ incidental, or consequential damages of any character arising as a

+ result of this License or out of the use or inability to use the

+ Work (including but not limited to damages for loss of goodwill,

+ work stoppage, computer failure or malfunction, or any and all

+ other commercial damages or losses), even if such Contributor

+ has been advised of the possibility of such damages.

+

+ 9. Accepting Warranty or Additional Liability. While redistributing

+ the Work or Derivative Works thereof, You may choose to offer,

+ and charge a fee for, acceptance of support, warranty, indemnity,

+ or other liability obligations and/or rights consistent with this

+ License. However, in accepting such obligations, You may act only

+ on Your own behalf and on Your sole responsibility, not on behalf

+ of any other Contributor, and only if You agree to indemnify,

+ defend, and hold each Contributor harmless for any liability

+ incurred by, or claims asserted against, such Contributor by reason

+ of your accepting any such warranty or additional liability.

+

+ END OF TERMS AND CONDITIONS

+

+ APPENDIX: How to apply the Apache License to your work.

+

+ To apply the Apache License to your work, attach the following

+ boilerplate notice, with the fields enclosed by brackets "[]"

+ replaced with your own identifying information. (Don't include

+ the brackets!) The text should be enclosed in the appropriate

+ comment syntax for the file format. We also recommend that a

+ file or class name and description of purpose be included on the

+ same "printed page" as the copyright notice for easier

+ identification within third-party archives.

+

+Copyright 2018 Netflix, Inc.

+

+ Licensed under the Apache License, Version 2.0 (the "License");

+ you may not use this file except in compliance with the License.

+ You may obtain a copy of the License at

+

+ http://www.apache.org/licenses/LICENSE-2.0

+

+ Unless required by applicable law or agreed to in writing, software

+ distributed under the License is distributed on an "AS IS" BASIS,

+ WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ See the License for the specific language governing permissions and

+ limitations under the License.

\ No newline at end of file

diff --git a/LICENSE-APACHE-2.0 b/LICENSE-APACHE-2.0

deleted file mode 100644

index 46de5eb1..00000000

--- a/LICENSE-APACHE-2.0

+++ /dev/null

@@ -1,201 +0,0 @@

-Apache License

-Version 2.0, January 2004

-http://www.apache.org/licenses/

-

-TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

-

-1. Definitions.

-

-"License" shall mean the terms and conditions for use, reproduction,

-and distribution as defined by Sections 1 through 9 of this document.

-

-"Licensor" shall mean the copyright owner or entity authorized by

-the copyright owner that is granting the License.

-

-"Legal Entity" shall mean the union of the acting entity and all

-other entities that control, are controlled by, or are under common

-control with that entity. For the purposes of this definition,

-"control" means (i) the power, direct or indirect, to cause the

-direction or management of such entity, whether by contract or

-otherwise, or (ii) ownership of fifty percent (50%) or more of the

-outstanding shares, or (iii) beneficial ownership of such entity.

-

-"You" (or "Your") shall mean an individual or Legal Entity

-exercising permissions granted by this License.

-

-"Source" form shall mean the preferred form for making modifications,

-including but not limited to software source code, documentation

-source, and configuration files.

-

-"Object" form shall mean any form resulting from mechanical

-transformation or translation of a Source form, including but

-not limited to compiled object code, generated documentation,

-and conversions to other media types.

-

-"Work" shall mean the work of authorship, whether in Source or

-Object form, made available under the License, as indicated by a

-copyright notice that is included in or attached to the work

-(an example is provided in the Appendix below).

-

-"Derivative Works" shall mean any work, whether in Source or Object

-form, that is based on (or derived from) the Work and for which the

-editorial revisions, annotations, elaborations, or other modifications

-represent, as a whole, an original work of authorship. For the purposes

-of this License, Derivative Works shall not include works that remain

-separable from, or merely link (or bind by name) to the interfaces of,

-the Work and Derivative Works thereof.

-

-"Contribution" shall mean any work of authorship, including

-the original version of the Work and any modifications or additions

-to that Work or Derivative Works thereof, that is intentionally

-submitted to Licensor for inclusion in the Work by the copyright owner

-or by an individual or Legal Entity authorized to submit on behalf of

-the copyright owner. For the purposes of this definition, "submitted"

-means any form of electronic, verbal, or written communication sent

-to the Licensor or its representatives, including but not limited to

-communication on electronic mailing lists, source code control systems,

-and issue tracking systems that are managed by, or on behalf of, the

-Licensor for the purpose of discussing and improving the Work, but

-excluding communication that is conspicuously marked or otherwise

-designated in writing by the copyright owner as "Not a Contribution."

-

-"Contributor" shall mean Licensor and any individual or Legal Entity

-on behalf of whom a Contribution has been received by Licensor and

-subsequently incorporated within the Work.

-

-2. Grant of Copyright License. Subject to the terms and conditions of

-this License, each Contributor hereby grants to You a perpetual,

-worldwide, non-exclusive, no-charge, royalty-free, irrevocable

-copyright license to reproduce, prepare Derivative Works of,

-publicly display, publicly perform, sublicense, and distribute the

-Work and such Derivative Works in Source or Object form.

-

-3. Grant of Patent License. Subject to the terms and conditions of

-this License, each Contributor hereby grants to You a perpetual,

-worldwide, non-exclusive, no-charge, royalty-free, irrevocable

-(except as stated in this section) patent license to make, have made,

-use, offer to sell, sell, import, and otherwise transfer the Work,

-where such license applies only to those patent claims licensable

-by such Contributor that are necessarily infringed by their

-Contribution(s) alone or by combination of their Contribution(s)

-with the Work to which such Contribution(s) was submitted. If You

-institute patent litigation against any entity (including a

-cross-claim or counterclaim in a lawsuit) alleging that the Work

-or a Contribution incorporated within the Work constitutes direct

-or contributory patent infringement, then any patent licenses

-granted to You under this License for that Work shall terminate

-as of the date such litigation is filed.

-

-4. Redistribution. You may reproduce and distribute copies of the

-Work or Derivative Works thereof in any medium, with or without

-modifications, and in Source or Object form, provided that You

-meet the following conditions:

-

-(a) You must give any other recipients of the Work or

-Derivative Works a copy of this License; and

-

-(b) You must cause any modified files to carry prominent notices

-stating that You changed the files; and

-

-(c) You must retain, in the Source form of any Derivative Works

-that You distribute, all copyright, patent, trademark, and

-attribution notices from the Source form of the Work,

-excluding those notices that do not pertain to any part of

-the Derivative Works; and

-

-(d) If the Work includes a "NOTICE" text file as part of its

-distribution, then any Derivative Works that You distribute must

-include a readable copy of the attribution notices contained

-within such NOTICE file, excluding those notices that do not

-pertain to any part of the Derivative Works, in at least one

-of the following places: within a NOTICE text file distributed

-as part of the Derivative Works; within the Source form or

-documentation, if provided along with the Derivative Works; or,

-within a display generated by the Derivative Works, if and

-wherever such third-party notices normally appear. The contents

-of the NOTICE file are for informational purposes only and

-do not modify the License. You may add Your own attribution

-notices within Derivative Works that You distribute, alongside

-or as an addendum to the NOTICE text from the Work, provided

-that such additional attribution notices cannot be construed

-as modifying the License.

-

-You may add Your own copyright statement to Your modifications and

-may provide additional or different license terms and conditions

-for use, reproduction, or distribution of Your modifications, or

-for any such Derivative Works as a whole, provided Your use,

-reproduction, and distribution of the Work otherwise complies with

-the conditions stated in this License.

-

-5. Submission of Contributions. Unless You explicitly state otherwise,

-any Contribution intentionally submitted for inclusion in the Work

-by You to the Licensor shall be under the terms and conditions of

-this License, without any additional terms or conditions.

-Notwithstanding the above, nothing herein shall supersede or modify

-the terms of any separate license agreement you may have executed

-with Licensor regarding such Contributions.

-

-6. Trademarks. This License does not grant permission to use the trade

-names, trademarks, service marks, or product names of the Licensor,

-except as required for reasonable and customary use in describing the

-origin of the Work and reproducing the content of the NOTICE file.

-

-7. Disclaimer of Warranty. Unless required by applicable law or

-agreed to in writing, Licensor provides the Work (and each

-Contributor provides its Contributions) on an "AS IS" BASIS,

-WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

-implied, including, without limitation, any warranties or conditions

-of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

-PARTICULAR PURPOSE. You are solely responsible for determining the

-appropriateness of using or redistributing the Work and assume any

-risks associated with Your exercise of permissions under this License.

-

-8. Limitation of Liability. In no event and under no legal theory,

-whether in tort (including negligence), contract, or otherwise,

-unless required by applicable law (such as deliberate and grossly

-negligent acts) or agreed to in writing, shall any Contributor be

-liable to You for damages, including any direct, indirect, special,

-incidental, or consequential damages of any character arising as a

-result of this License or out of the use or inability to use the

-Work (including but not limited to damages for loss of goodwill,

-work stoppage, computer failure or malfunction, or any and all

-other commercial damages or losses), even if such Contributor

-has been advised of the possibility of such damages.

-

-9. Accepting Warranty or Additional Liability. While redistributing

-the Work or Derivative Works thereof, You may choose to offer,

-and charge a fee for, acceptance of support, warranty, indemnity,

-or other liability obligations and/or rights consistent with this

-License. However, in accepting such obligations, You may act only

-on Your own behalf and on Your sole responsibility, not on behalf

-of any other Contributor, and only if You agree to indemnify,

-defend, and hold each Contributor harmless for any liability

-incurred by, or claims asserted against, such Contributor by reason

-of your accepting any such warranty or additional liability.

-

-END OF TERMS AND CONDITIONS

-

-APPENDIX: How to apply the Apache License to your work.

-

-To apply the Apache License to your work, attach the following

-boilerplate notice, with the fields enclosed by brackets "[]"

-replaced with your own identifying information. (Don't include

-the brackets!) The text should be enclosed in the appropriate

-comment syntax for the file format. We also recommend that a

-file or class name and description of purpose be included on the

-same "printed page" as the copyright notice for easier

-identification within third-party archives.

-

-Copyright 2021 Toni de la Fuente

-

-Licensed under the Apache License, Version 2.0 (the "License");

-you may not use this file except in compliance with the License.

-You may obtain a copy of the License at

-

-http://www.apache.org/licenses/LICENSE-2.0

-

-Unless required by applicable law or agreed to in writing, software

-distributed under the License is distributed on an "AS IS" BASIS,

-WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-See the License for the specific language governing permissions and

-limitations under the License.

diff --git a/Pipfile b/Pipfile

index fbd6dce0..ae5a1ba9 100644

--- a/Pipfile

+++ b/Pipfile

@@ -7,7 +7,7 @@ verify_ssl = true

[packages]

boto3 = ">=1.9.188"

-detect-secrets = ">=0.12.4"

+detect-secrets = "==1.0.3"

[requires]

python_version = "3.7"

diff --git a/README.md b/README.md

index 727b7242..5cb8afde 100644

--- a/README.md

+++ b/README.md

@@ -4,6 +4,11 @@

# Prowler - AWS Security Tool

+[Discord](https://discord.gg/UjSMCVnxSB)

+[Docker Hub](https://hub.docker.com/r/toniblyx/prowler)

+[Amazon ECR Public Gallery](https://gallery.ecr.aws/o4g1s5r6/prowler)

+

+

## Table of Contents

- [Description](#description)

@@ -39,7 +44,7 @@ Read more about [CIS Amazon Web Services Foundations Benchmark v1.2.0 - 05-23-20

## Features

-+180 checks covering security best practices across all AWS regions and most of AWS services and related to the next groups:

++200 checks covering security best practices across all AWS regions and most of AWS services and related to the next groups:

- Identity and Access Management [group1]

- Logging [group2]

@@ -56,6 +61,7 @@ Read more about [CIS Amazon Web Services Foundations Benchmark v1.2.0 - 05-23-20

- Internet exposed resources

- EKS-CIS

- Also includes PCI-DSS, ISO-27001, FFIEC, SOC2, ENS (Esquema Nacional de Seguridad of Spain).

+- AWS FTR [FTR] Read more [here](#aws-ftr-checks)

With Prowler you can:

@@ -78,17 +84,26 @@ Prowler has been written in bash using AWS-CLI and it works in Linux and OSX.

- Make sure the latest version of AWS-CLI is installed on your workstation (it works with either v1 or v2), and other components needed, with Python pip already installed:

```sh

- pip install awscli detect-secrets

+ pip install awscli

```

- AWS-CLI can be also installed it using "brew", "apt", "yum" or manually from , but `detect-secrets` has to be installed using `pip`. You will need to install `jq` to get the most from Prowler.

+ > NOTE: The Yelp version of detect-secrets is no longer supported; the IBM fork is the maintained one. Use the IBM version shown below, or pin the Yelp version to 1.0.3 (`pip install detect-secrets==1.0.3`), to make sure it works as expected:

+ ```sh

+ pip install "git+https://github.com/ibm/detect-secrets.git@master#egg=detect-secrets"

+ ```

-- Make sure jq is installed (example below with "apt" but use a valid package manager for your OS):

+ AWS-CLI can also be installed using "brew", "apt", "yum" or manually from , but `detect-secrets` has to be installed using `pip` or `pip3`. You will need to install `jq` to get the most from Prowler.

+

+- Make sure jq is installed. The examples below use "apt" for Debian-like distros and "yum" for RedHat-like distros (such as Amazon Linux):

```sh

sudo apt install jq

```

+ ```sh

+ sudo yum install jq

+ ```

+

- Previous steps, from your workstation:

```sh

@@ -187,23 +202,27 @@ Prowler has been written in bash using AWS-CLI and it works in Linux and OSX.

### Regions

-By default Prowler scans all opt-in regions available, that might take a long execution time depending on the number of resources and regions used. Same applies for GovCloud or China regions. See below Advance usage for examples.

+By default, Prowler scans all available opt-in regions, which can take a long time depending on the number of resources and regions used. The same applies to GovCloud or China regions. See Advanced usage below for examples.

-Prowler has to parameters related to regions: `-r` that is used query AWS services API endpoints (it uses `us-east-1` by default and required for GovCloud or China) and the option `-f` that is to filter those regions you only want to scan. For example if you want to scan Dublin only use `-f eu-west-1` and if you want to scan Dublin and Ohio `-f 'eu-west-1 us-east-s'`, note the single quotes and space between regions.

+Prowler has two parameters related to regions: `-r`, which selects the region used to query AWS service API endpoints (it defaults to `us-east-1` and is required for GovCloud or China), and `-f`, which filters the regions you want to scan. For example, to scan only Dublin use `-f eu-west-1`, and to scan Dublin and Ohio use `-f 'eu-west-1 us-east-2'`; note the single quotes and the space between regions.

## Screenshots

-- Sample screenshot of report first lines:

+- Sample screenshot of default console report first lines of command `./prowler`:

-  +

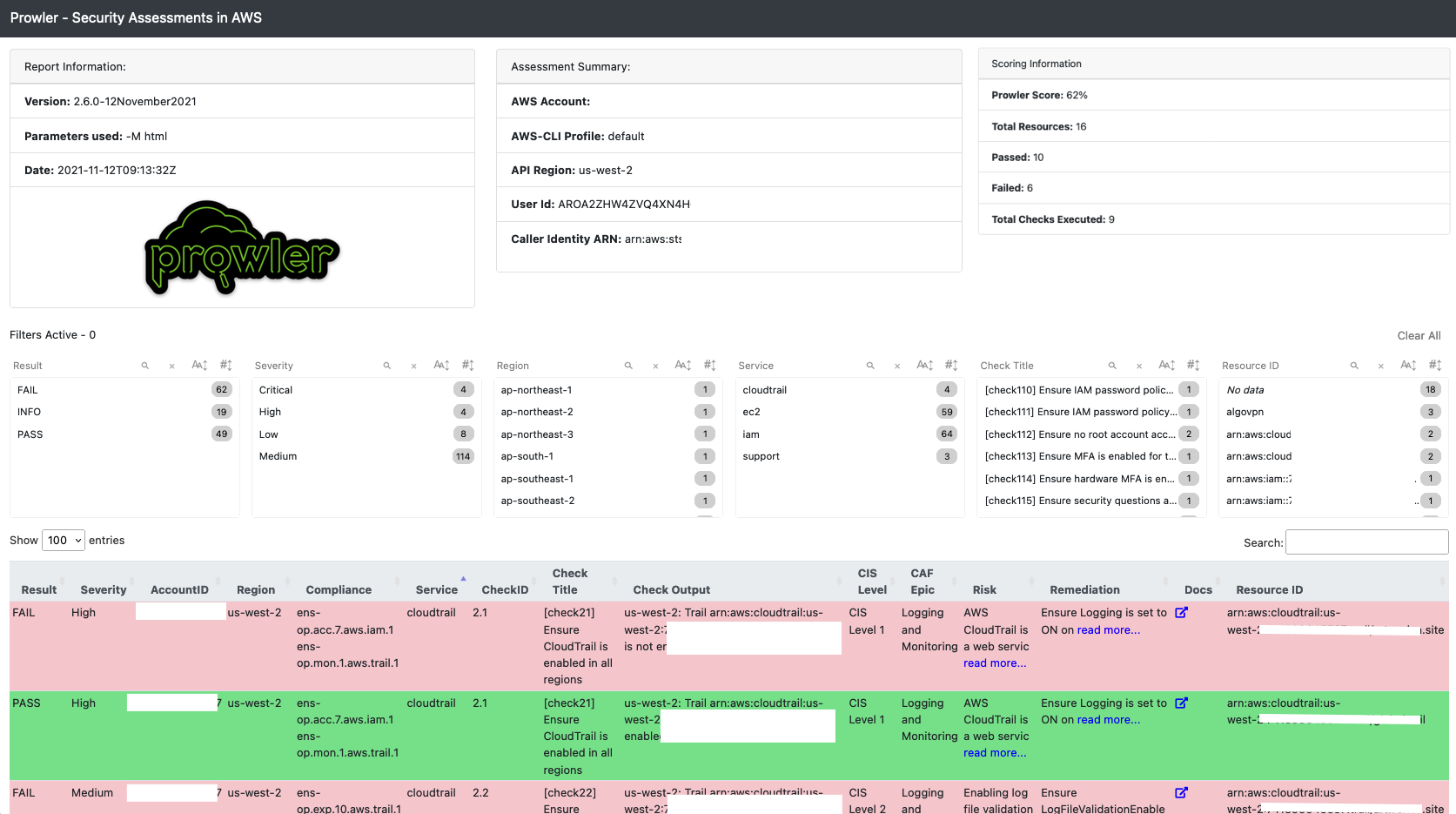

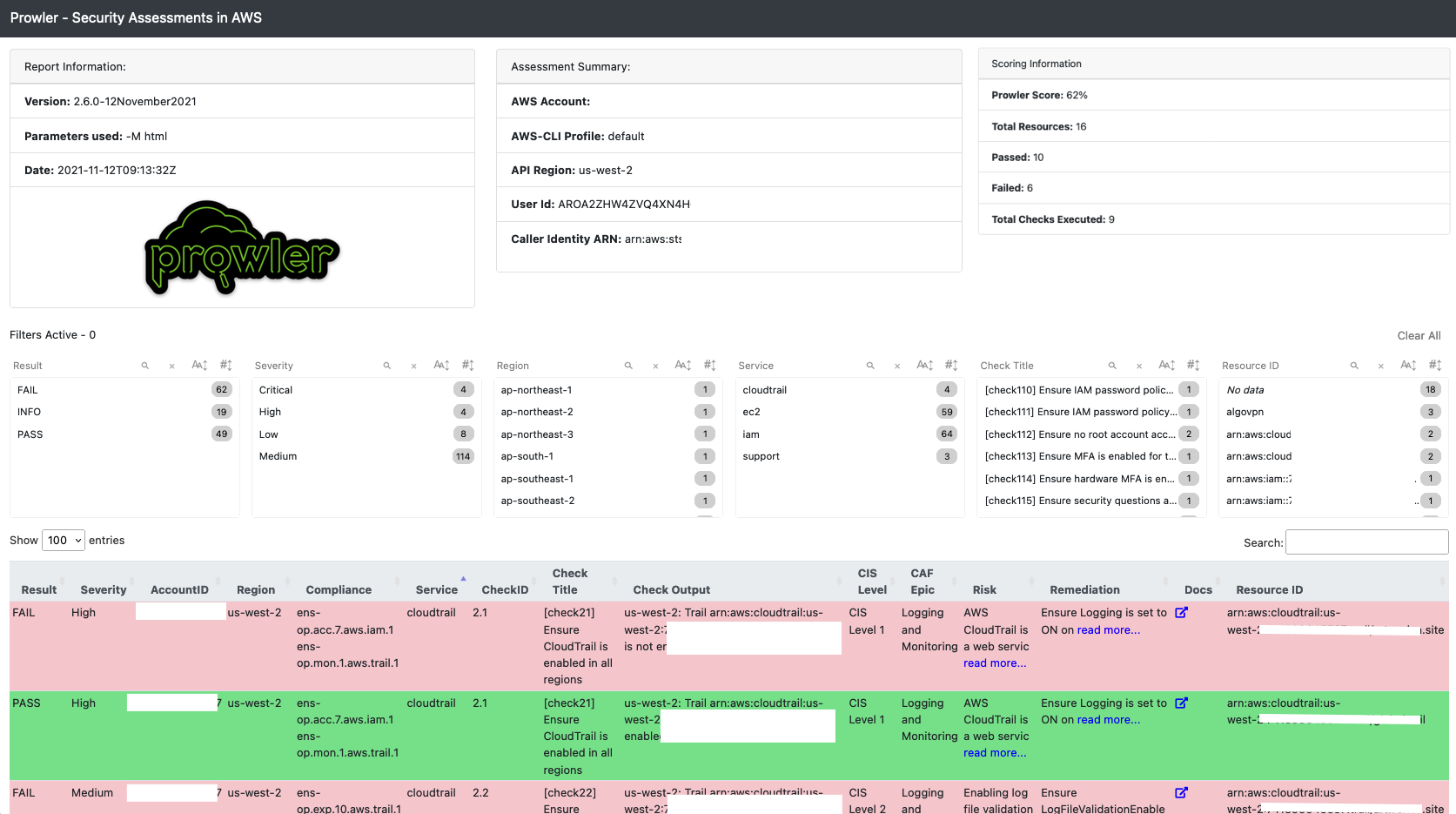

+  - Sample screenshot of the html output `-M html`:

-

- Sample screenshot of the html output `-M html`:

-  +

+  +

+- Sample screenshot of the Quicksight dashboard, see [https://quicksight-security-dashboard.workshop.aws](quicksight-security-dashboard.workshop.aws/):

+

+

+

+- Sample screenshot of the Quicksight dashboard, see [https://quicksight-security-dashboard.workshop.aws](quicksight-security-dashboard.workshop.aws/):

+

+  - Sample screenshot of the junit-xml output in CodeBuild `-M junit-xml`:

-

- Sample screenshot of the junit-xml output in CodeBuild `-M junit-xml`:

-  +

+  ### Save your reports

@@ -323,7 +342,7 @@ Usig the same for loop it can be scanned a list of accounts with a variable like

### GovCloud

Prowler runs in GovCloud regions as well. To make sure it points to the right API endpoint use `-r` to either `us-gov-west-1` or `us-gov-east-1`. If not filter region is used it will look for resources in both GovCloud regions by default:

-```

+```sh

./prowler -r us-gov-west-1

```

> For Security Hub integration see below in Security Hub section.

@@ -334,9 +353,12 @@ Flag `-x /my/own/checks` will include any check in that particular directory. To

### Show or log only FAILs

-In order to remove noise and get only FAIL findings there is a `-q` flag that makes Prowler to show and log only FAILs. It can be combined with any other option.

+To reduce noise and get only FAIL findings, use the `-q` flag, which makes Prowler show and log only FAILs.

+It can be combined with any other option.

+Prowler still shows WARNINGs when a resource is excluded, so those can be taken into account.

```sh

+# -q option combined with -M csv -b

./prowler -q -M csv -b

```

@@ -503,7 +525,7 @@ The `aws iam create-access-key` command will output the secret access key and th

## Extras

-We are adding additional checks to improve the information gather from each account, these checks are out of the scope of the CIS benchmark for AWS but we consider them very helpful to get to know each AWS account set up and find issues on it.

+We are adding additional checks to improve the information gathered from each account. These checks are outside the scope of the CIS benchmark for AWS, but we consider them very helpful for getting to know each AWS account setup and finding issues in it.

Some of these checks look for publicly facing resources may not actually be fully public due to other layered controls like S3 Bucket Policies, Security Groups or Network ACLs.

@@ -558,6 +580,18 @@ The `gdpr` group of checks uses existing and extra checks. To get a GDPR report,

./prowler -g gdpr

```

+## AWS FTR Checks

+

+With this group of checks, Prowler shows the results of checks related to the AWS Foundational Technical Review (FTR); more information [here](https://apn-checklists.s3.amazonaws.com/foundational/partner-hosted/partner-hosted/CVLHEC5X7.html). The list of checks can be seen in the group file at:

+

+[groups/group25_ftr](groups/group25_ftr)

+

+The `ftr` group of checks uses existing and extra checks. To get an AWS FTR report, run this command:

+

+```sh

+./prowler -g ftr

+```

+

## HIPAA Checks

With this group of checks, Prowler shows results of controls related to the "Security Rule" of the Health Insurance Portability and Accountability Act aka [HIPAA](https://www.hhs.gov/hipaa/for-professionals/security/index.html) as defined in [45 CFR Subpart C - Security Standards for the Protection of Electronic Protected Health Information](https://www.law.cornell.edu/cfr/text/45/part-164/subpart-C) within [PART 160 - GENERAL ADMINISTRATIVE REQUIREMENTS](https://www.law.cornell.edu/cfr/text/45/part-160) and [Subpart A](https://www.law.cornell.edu/cfr/text/45/part-164/subpart-A) and [Subpart C](https://www.law.cornell.edu/cfr/text/45/part-164/subpart-C) of PART 164 - SECURITY AND PRIVACY

@@ -601,7 +635,7 @@ To give it a quick shot just call:

### Scenarios

-Currently this check group supports two different scenarios:

+Currently, this check group supports two different scenarios:

1. Single account environment: no action required, the configuration is happening automatically for you.

2. Multi account environment: in case you environment has multiple trusted and known AWS accounts you maybe want to append them manually to [groups/group16_trustboundaries](groups/group16_trustboundaries) as a space separated list into `GROUP_TRUSTBOUNDARIES_TRUSTED_ACCOUNT_IDS` variable, then just run prowler.

@@ -623,7 +657,7 @@ Every circle represents one AWS account.

The dashed line represents the trust boundary, that separates trust and untrusted AWS accounts.

The arrow simply describes the direction of the trust, however the data can potentially flow in both directions.

-Single Account environment assumes that only the AWS account subject to this analysis is trusted. However there is a chance that two VPCs are existing within that one AWS account which are still trusted as a self reference.

+Single Account environment assumes that only the AWS account subject to this analysis is trusted. However, two VPCs may exist within that one AWS account, and they are still trusted as a self-reference.

Multi Account environments assumes a minimum of two trusted or known accounts. For this particular example all trusted and known accounts will be tested. Therefore `GROUP_TRUSTBOUNDARIES_TRUSTED_ACCOUNT_IDS` variable in [groups/group16_trustboundaries](groups/group16_trustboundaries) should include all trusted accounts Account #A, Account #B, Account #C, and Account #D in order to finally raise Account #E and Account #F for being untrusted or unknown.
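The README text above describes `GROUP_TRUSTBOUNDARIES_TRUSTED_ACCOUNT_IDS` as a space-separated list of trusted account IDs. As a rough sketch only, the snippet below shows how an account ID could be tested against such a list in POSIX shell. The function name `is_trusted_account` and the account IDs are placeholders made up for illustration; how Prowler itself consumes the variable is not shown in this diff.

```sh
# Placeholder account IDs, for illustration only.
GROUP_TRUSTBOUNDARIES_TRUSTED_ACCOUNT_IDS="111111111111 222222222222"

# Hypothetical helper: succeeds (exit 0) when $1 is in the trusted list.
is_trusted_account() {
  # Unquoted on purpose: word splitting turns the list into separate IDs.
  for trusted_id in $GROUP_TRUSTBOUNDARIES_TRUSTED_ACCOUNT_IDS; do
    [ "$trusted_id" = "$1" ] && return 0
  done
  return 1
}
```

With the placeholder list above, `is_trusted_account 111111111111` succeeds, while any ID outside the list fails and would be reported as untrusted or unknown.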

diff --git a/checklist.txt b/checklist.txt

new file mode 100644

index 00000000..83e556a2

--- /dev/null

+++ b/checklist.txt

@@ -0,0 +1,6 @@

+# You can add a comma separated list of checks like this:

+check11,check12

+extra72 # You can also use newlines for each check

+check13 # This way allows you to add inline comments

+# Both of these can be combined if you have a standard list and want to add

+# inline comments for other checks.

\ No newline at end of file

diff --git a/checks/check11 b/checks/check11

index 6162db56..f5d6a742 100644

--- a/checks/check11

+++ b/checks/check11

@@ -14,7 +14,7 @@

CHECK_ID_check11="1.1"

CHECK_TITLE_check11="[check11] Avoid the use of the root account"

CHECK_SCORED_check11="SCORED"

-CHECK_TYPE_check11="LEVEL1"

+CHECK_CIS_LEVEL_check11="LEVEL1"

CHECK_SEVERITY_check11="High"

CHECK_ASFF_TYPE_check11="Software and Configuration Checks/Industry and Regulatory Standards/CIS AWS Foundations Benchmark"

CHECK_ALTERNATE_check101="check11"

diff --git a/checks/check110 b/checks/check110

index 2e60a65e..7054ea8d 100644

--- a/checks/check110

+++ b/checks/check110

@@ -14,7 +14,7 @@

CHECK_ID_check110="1.10"

CHECK_TITLE_check110="[check110] Ensure IAM password policy prevents password reuse: 24 or greater"

CHECK_SCORED_check110="SCORED"

-CHECK_TYPE_check110="LEVEL1"

+CHECK_CIS_LEVEL_check110="LEVEL1"

CHECK_SEVERITY_check110="Medium"

CHECK_ASFF_TYPE_check110="Software and Configuration Checks/Industry and Regulatory Standards/CIS AWS Foundations Benchmark"

CHECK_ALTERNATE_check110="check110"

diff --git a/checks/check111 b/checks/check111

index 1a696f0b..9fbb90a4 100644

--- a/checks/check111

+++ b/checks/check111

@@ -14,7 +14,7 @@

CHECK_ID_check111="1.11"

CHECK_TITLE_check111="[check111] Ensure IAM password policy expires passwords within 90 days or less"

CHECK_SCORED_check111="SCORED"

-CHECK_TYPE_check111="LEVEL1"

+CHECK_CIS_LEVEL_check111="LEVEL1"

CHECK_SEVERITY_check111="Medium"

CHECK_ASFF_TYPE_check111="Software and Configuration Checks/Industry and Regulatory Standards/CIS AWS Foundations Benchmark"

CHECK_ALTERNATE_check111="check111"

diff --git a/checks/check112 b/checks/check112

index f2f6c422..16635494 100644

--- a/checks/check112

+++ b/checks/check112

@@ -14,7 +14,7 @@

CHECK_ID_check112="1.12"

CHECK_TITLE_check112="[check112] Ensure no root account access key exists"

CHECK_SCORED_check112="SCORED"

-CHECK_TYPE_check112="LEVEL1"

+CHECK_CIS_LEVEL_check112="LEVEL1"

CHECK_SEVERITY_check112="Critical"

CHECK_ASFF_TYPE_check112="Software and Configuration Checks/Industry and Regulatory Standards/CIS AWS Foundations Benchmark"

CHECK_ALTERNATE_check112="check112"

diff --git a/checks/check113 b/checks/check113

index 657c9b0a..b98a4f51 100644

--- a/checks/check113

+++ b/checks/check113

@@ -14,7 +14,7 @@

CHECK_ID_check113="1.13"

CHECK_TITLE_check113="[check113] Ensure MFA is enabled for the root account"

CHECK_SCORED_check113="SCORED"

-CHECK_TYPE_check113="LEVEL1"

+CHECK_CIS_LEVEL_check113="LEVEL1"

CHECK_SEVERITY_check113="Critical"

CHECK_ASFF_TYPE_check113="Software and Configuration Checks/Industry and Regulatory Standards/CIS AWS Foundations Benchmark"

CHECK_ALTERNATE_check113="check113"

diff --git a/checks/check114 b/checks/check114

index 7872583f..aaa9dc0b 100644

--- a/checks/check114

+++ b/checks/check114

@@ -14,7 +14,7 @@

CHECK_ID_check114="1.14"

CHECK_TITLE_check114="[check114] Ensure hardware MFA is enabled for the root account"

CHECK_SCORED_check114="SCORED"

-CHECK_TYPE_check114="LEVEL2"

+CHECK_CIS_LEVEL_check114="LEVEL2"

CHECK_SEVERITY_check114="Critical"

CHECK_ASFF_TYPE_check114="Software and Configuration Checks/Industry and Regulatory Standards/CIS AWS Foundations Benchmark"

CHECK_ALTERNATE_check114="check114"

diff --git a/checks/check115 b/checks/check115

index 356ba6d7..d7c7603a 100644

--- a/checks/check115

+++ b/checks/check115

@@ -14,7 +14,7 @@

CHECK_ID_check115="1.15"

CHECK_TITLE_check115="[check115] Ensure security questions are registered in the AWS account"

CHECK_SCORED_check115="NOT_SCORED"

-CHECK_TYPE_check115="LEVEL1"

+CHECK_CIS_LEVEL_check115="LEVEL1"

CHECK_SEVERITY_check115="Medium"

CHECK_ASFF_TYPE_check115="Software and Configuration Checks/Industry and Regulatory Standards/CIS AWS Foundations Benchmark"

CHECK_ALTERNATE_check115="check115"

diff --git a/checks/check116 b/checks/check116

index 18a0cbc3..0cc78432 100644

--- a/checks/check116

+++ b/checks/check116

@@ -14,7 +14,7 @@

CHECK_ID_check116="1.16"

CHECK_TITLE_check116="[check116] Ensure IAM policies are attached only to groups or roles"

CHECK_SCORED_check116="SCORED"

-CHECK_TYPE_check116="LEVEL1"

+CHECK_CIS_LEVEL_check116="LEVEL1"

CHECK_SEVERITY_check116="Low"

CHECK_ASFF_TYPE_check116="Software and Configuration Checks/Industry and Regulatory Standards/CIS AWS Foundations Benchmark"

CHECK_ASFF_RESOURCE_TYPE_check116="AwsIamUser"

diff --git a/checks/check117 b/checks/check117

index e9854cd0..96658c5b 100644

--- a/checks/check117

+++ b/checks/check117

@@ -14,7 +14,7 @@

CHECK_ID_check117="1.17"

CHECK_TITLE_check117="[check117] Maintain current contact details"

CHECK_SCORED_check117="NOT_SCORED"

-CHECK_TYPE_check117="LEVEL1"

+CHECK_CIS_LEVEL_check117="LEVEL1"

CHECK_SEVERITY_check117="Medium"

CHECK_ASFF_TYPE_check117="Software and Configuration Checks/Industry and Regulatory Standards/CIS AWS Foundations Benchmark"

CHECK_ALTERNATE_check117="check117"

diff --git a/checks/check118 b/checks/check118

index 736bb594..f2e31c9d 100644

--- a/checks/check118

+++ b/checks/check118

@@ -14,7 +14,7 @@

CHECK_ID_check118="1.18"

CHECK_TITLE_check118="[check118] Ensure security contact information is registered"

CHECK_SCORED_check118="NOT_SCORED"

-CHECK_TYPE_check118="LEVEL1"

+CHECK_CIS_LEVEL_check118="LEVEL1"

CHECK_SEVERITY_check118="Medium"

CHECK_ASFF_TYPE_check118="Software and Configuration Checks/Industry and Regulatory Standards/CIS AWS Foundations Benchmark"

CHECK_ALTERNATE_check118="check118"

diff --git a/checks/check119 b/checks/check119

index e9d148dc..c1ebe209 100644

--- a/checks/check119

+++ b/checks/check119

@@ -14,7 +14,7 @@

CHECK_ID_check119="1.19"

CHECK_TITLE_check119="[check119] Ensure IAM instance roles are used for AWS resource access from instances"

CHECK_SCORED_check119="NOT_SCORED"

-CHECK_TYPE_check119="LEVEL2"

+CHECK_CIS_LEVEL_check119="LEVEL2"

CHECK_SEVERITY_check119="Medium"

CHECK_ASFF_TYPE_check119="Software and Configuration Checks/Industry and Regulatory Standards/CIS AWS Foundations Benchmark"

CHECK_ASFF_RESOURCE_TYPE_check119="AwsEc2Instance"

diff --git a/checks/check12 b/checks/check12

index deca5af2..f2b5d920 100644

--- a/checks/check12

+++ b/checks/check12

@@ -14,7 +14,7 @@

CHECK_ID_check12="1.2"

CHECK_TITLE_check12="[check12] Ensure multi-factor authentication (MFA) is enabled for all IAM users that have a console password"

CHECK_SCORED_check12="SCORED"

-CHECK_TYPE_check12="LEVEL1"

+CHECK_CIS_LEVEL_check12="LEVEL1"

CHECK_SEVERITY_check12="High"

CHECK_ASFF_TYPE_check12="Software and Configuration Checks/Industry and Regulatory Standards/CIS AWS Foundations Benchmark"

CHECK_ASFF_RESOURCE_TYPE_check12="AwsIamUser"

diff --git a/checks/check120 b/checks/check120

index e2935a5b..3223345a 100644

--- a/checks/check120

+++ b/checks/check120

@@ -14,7 +14,7 @@

CHECK_ID_check120="1.20"

CHECK_TITLE_check120="[check120] Ensure a support role has been created to manage incidents with AWS Support"

CHECK_SCORED_check120="SCORED"

-CHECK_TYPE_check120="LEVEL1"

+CHECK_CIS_LEVEL_check120="LEVEL1"

CHECK_SEVERITY_check120="Medium"

CHECK_ASFF_TYPE_check120="Software and Configuration Checks/Industry and Regulatory Standards/CIS AWS Foundations Benchmark"

CHECK_ASFF_RESOURCE_TYPE_check120="AwsIamRole"

@@ -31,7 +31,7 @@ check120(){

SUPPORTPOLICYARN=$($AWSCLI iam list-policies --query "Policies[?PolicyName == 'AWSSupportAccess'].Arn" $PROFILE_OPT --region $REGION --output text)

if [[ $SUPPORTPOLICYARN ]];then

for policyarn in $SUPPORTPOLICYARN;do

- POLICYROLES=$($AWSCLI iam list-entities-for-policy --policy-arn $SUPPORTPOLICYARN $PROFILE_OPT --region $REGION --output text | awk -F$'\t' '{ print $3 }')

+ POLICYROLES=$($AWSCLI iam list-entities-for-policy --policy-arn $policyarn $PROFILE_OPT --region $REGION --output text | awk -F$'\t' '{ print $3 }')

if [[ $POLICYROLES ]];then

for name in $POLICYROLES; do

textPass "$REGION: Support Policy attached to $name" "$REGION" "$name"

diff --git a/checks/check121 b/checks/check121

index 64dd729c..140fb1a3 100644

--- a/checks/check121

+++ b/checks/check121

@@ -14,7 +14,7 @@

CHECK_ID_check121="1.21"

CHECK_TITLE_check121="[check121] Do not setup access keys during initial user setup for all IAM users that have a console password"

CHECK_SCORED_check121="NOT_SCORED"

-CHECK_TYPE_check121="LEVEL1"

+CHECK_CIS_LEVEL_check121="LEVEL1"

CHECK_SEVERITY_check121="Medium"

CHECK_ASFF_TYPE_check121="Software and Configuration Checks/Industry and Regulatory Standards/CIS AWS Foundations Benchmark"

CHECK_ASFF_RESOURCE_TYPE_check121="AwsIamUser"

diff --git a/checks/check122 b/checks/check122

index 70423199..81ea3e61 100644

--- a/checks/check122

+++ b/checks/check122

@@ -14,7 +14,7 @@

CHECK_ID_check122="1.22"

CHECK_TITLE_check122="[check122] Ensure IAM policies that allow full \"*:*\" administrative privileges are not created"

CHECK_SCORED_check122="SCORED"

-CHECK_TYPE_check122="LEVEL1"

+CHECK_CIS_LEVEL_check122="LEVEL1"

CHECK_SEVERITY_check122="Medium"

CHECK_ASFF_TYPE_check122="Software and Configuration Checks/Industry and Regulatory Standards/CIS AWS Foundations Benchmark"

CHECK_ASFF_RESOURCE_TYPE_check122="AwsIamPolicy"

diff --git a/checks/check13 b/checks/check13

index 81b2c52b..050ff84b 100644

--- a/checks/check13

+++ b/checks/check13

@@ -14,7 +14,7 @@

CHECK_ID_check13="1.3"

CHECK_TITLE_check13="[check13] Ensure credentials unused for 90 days or greater are disabled"

CHECK_SCORED_check13="SCORED"

-CHECK_TYPE_check13="LEVEL1"

+CHECK_CIS_LEVEL_check13="LEVEL1"

CHECK_SEVERITY_check13="Medium"

CHECK_ASFF_TYPE_check13="Software and Configuration Checks/Industry and Regulatory Standards/CIS AWS Foundations Benchmark"

CHECK_ASFF_RESOURCE_TYPE_check13="AwsIamUser"

diff --git a/checks/check14 b/checks/check14

index 0d9d1cc7..fd669860 100644

--- a/checks/check14

+++ b/checks/check14

@@ -14,7 +14,7 @@

CHECK_ID_check14="1.4"

CHECK_TITLE_check14="[check14] Ensure access keys are rotated every 90 days or less"

CHECK_SCORED_check14="SCORED"

-CHECK_TYPE_check14="LEVEL1"

+CHECK_CIS_LEVEL_check14="LEVEL1"

CHECK_SEVERITY_check14="Medium"

CHECK_ASFF_TYPE_check14="Software and Configuration Checks/Industry and Regulatory Standards/CIS AWS Foundations Benchmark"

CHECK_ASFF_RESOURCE_TYPE_check14="AwsIamUser"

diff --git a/checks/check15 b/checks/check15

index 079245d0..a5d0d749 100644

--- a/checks/check15

+++ b/checks/check15

@@ -14,7 +14,7 @@

CHECK_ID_check15="1.5"

CHECK_TITLE_check15="[check15] Ensure IAM password policy requires at least one uppercase letter"

CHECK_SCORED_check15="SCORED"

-CHECK_TYPE_check15="LEVEL1"

+CHECK_CIS_LEVEL_check15="LEVEL1"

CHECK_SEVERITY_check15="Medium"

CHECK_ASFF_TYPE_check15="Software and Configuration Checks/Industry and Regulatory Standards/CIS AWS Foundations Benchmark"

CHECK_ALTERNATE_check105="check15"

diff --git a/checks/check16 b/checks/check16

index 719811d9..4ba1c855 100644

--- a/checks/check16

+++ b/checks/check16

@@ -14,7 +14,7 @@

CHECK_ID_check16="1.6"

CHECK_TITLE_check16="[check16] Ensure IAM password policy require at least one lowercase letter"

CHECK_SCORED_check16="SCORED"

-CHECK_TYPE_check16="LEVEL1"

+CHECK_CIS_LEVEL_check16="LEVEL1"

CHECK_SEVERITY_check16="Medium"

CHECK_ASFF_TYPE_check16="Software and Configuration Checks/Industry and Regulatory Standards/CIS AWS Foundations Benchmark"

CHECK_ALTERNATE_check106="check16"

diff --git a/checks/check17 b/checks/check17

index 72fdd247..92a97039 100644

--- a/checks/check17

+++ b/checks/check17

@@ -14,7 +14,7 @@

CHECK_ID_check17="1.7"

CHECK_TITLE_check17="[check17] Ensure IAM password policy require at least one symbol"

CHECK_SCORED_check17="SCORED"

-CHECK_TYPE_check17="LEVEL1"

+CHECK_CIS_LEVEL_check17="LEVEL1"

CHECK_SEVERITY_check17="Medium"

CHECK_ASFF_TYPE_check17="Software and Configuration Checks/Industry and Regulatory Standards/CIS AWS Foundations Benchmark"

CHECK_ALTERNATE_check107="check17"

diff --git a/checks/check18 b/checks/check18

index c13e101b..a4e27d24 100644

--- a/checks/check18

+++ b/checks/check18

@@ -14,7 +14,7 @@

CHECK_ID_check18="1.8"

CHECK_TITLE_check18="[check18] Ensure IAM password policy require at least one number"

CHECK_SCORED_check18="SCORED"

-CHECK_TYPE_check18="LEVEL1"

+CHECK_CIS_LEVEL_check18="LEVEL1"

CHECK_SEVERITY_check18="Medium"

CHECK_ASFF_TYPE_check18="Software and Configuration Checks/Industry and Regulatory Standards/CIS AWS Foundations Benchmark"

CHECK_ALTERNATE_check108="check18"

diff --git a/checks/check19 b/checks/check19

index e8b92818..49656b59 100644

--- a/checks/check19

+++ b/checks/check19

@@ -14,7 +14,7 @@

CHECK_ID_check19="1.9"

CHECK_TITLE_check19="[check19] Ensure IAM password policy requires minimum length of 14 or greater"

CHECK_SCORED_check19="SCORED"

-CHECK_TYPE_check19="LEVEL1"

+CHECK_CIS_LEVEL_check19="LEVEL1"

CHECK_SEVERITY_check19="Medium"

CHECK_ASFF_TYPE_check19="Software and Configuration Checks/Industry and Regulatory Standards/CIS AWS Foundations Benchmark"

CHECK_ALTERNATE_check109="check19"

diff --git a/checks/check21 b/checks/check21

index 0446243e..968f6c19 100644

--- a/checks/check21

+++ b/checks/check21

@@ -14,7 +14,7 @@

CHECK_ID_check21="2.1"

CHECK_TITLE_check21="[check21] Ensure CloudTrail is enabled in all regions"

CHECK_SCORED_check21="SCORED"

-CHECK_TYPE_check21="LEVEL1"

+CHECK_CIS_LEVEL_check21="LEVEL1"

CHECK_SEVERITY_check21="High"

CHECK_ASFF_TYPE_check21="Software and Configuration Checks/Industry and Regulatory Standards/CIS AWS Foundations Benchmark"

CHECK_ASFF_RESOURCE_TYPE_check21="AwsCloudTrailTrail"

@@ -55,11 +55,14 @@ check21(){

textPass "$regx: Trail $trail is enabled for all regions" "$regx" "$trail"

fi

fi

-

done

fi

done

if [[ $trail_count == 0 ]]; then

- textFail "$regx: No CloudTrail trails were found in the account" "$regx" "$trail"

+ if [[ $FILTERREGION ]]; then

+ textFail "$regx: No CloudTrail trails were found in the filtered region" "$regx" "$trail"

+ else

+ textFail "$regx: No CloudTrail trails were found in the account" "$regx" "$trail"

+ fi

fi

-}

+}

\ No newline at end of file

diff --git a/checks/check22 b/checks/check22

index 3ae3e775..cca1a8da 100644

--- a/checks/check22

+++ b/checks/check22

@@ -14,7 +14,7 @@

CHECK_ID_check22="2.2"

CHECK_TITLE_check22="[check22] Ensure CloudTrail log file validation is enabled"

CHECK_SCORED_check22="SCORED"

-CHECK_TYPE_check22="LEVEL2"

+CHECK_CIS_LEVEL_check22="LEVEL2"

CHECK_SEVERITY_check22="Medium"

CHECK_ASFF_TYPE_check22="Software and Configuration Checks/Industry and Regulatory Standards/CIS AWS Foundations Benchmark"

CHECK_ASFF_RESOURCE_TYPE_check22="AwsCloudTrailTrail"

diff --git a/checks/check23 b/checks/check23

index 56984176..48b9bf44 100644

--- a/checks/check23

+++ b/checks/check23

@@ -14,7 +14,7 @@

CHECK_ID_check23="2.3"

CHECK_TITLE_check23="[check23] Ensure the S3 bucket CloudTrail logs to is not publicly accessible"

CHECK_SCORED_check23="SCORED"

-CHECK_TYPE_check23="LEVEL1"

+CHECK_CIS_LEVEL_check23="LEVEL1"

CHECK_SEVERITY_check23="Critical"

CHECK_ASFF_TYPE_check23="Software and Configuration Checks/Industry and Regulatory Standards/CIS AWS Foundations Benchmark"

CHECK_ASFF_RESOURCE_TYPE_check23="AwsS3Bucket"

@@ -23,7 +23,7 @@ CHECK_ASFF_COMPLIANCE_TYPE_check23="ens-op.exp.10.aws.trail.3 ens-op.exp.10.aws.

CHECK_SERVICENAME_check23="cloudtrail"

CHECK_RISK_check23='Allowing public access to CloudTrail log content may aid an adversary in identifying weaknesses in the affected accounts use or configuration.'

CHECK_REMEDIATION_check23='Analyze Bucket policy to validate appropriate permissions. Ensure the AllUsers principal is not granted privileges. Ensure the AuthenticatedUsers principal is not granted privileges.'

-CHECK_DOC_check23='https://docs.aws.amazon.com/IAM/latest/UserGuide/reference_policies_elements_ principal.html '

+CHECK_DOC_check23='https://docs.aws.amazon.com/IAM/latest/UserGuide/reference_policies_elements_principal.html'

CHECK_CAF_EPIC_check23='Logging and Monitoring'

check23(){

diff --git a/checks/check24 b/checks/check24

index 57691f3b..68cabd67 100644

--- a/checks/check24

+++ b/checks/check24

@@ -14,7 +14,7 @@

CHECK_ID_check24="2.4"

CHECK_TITLE_check24="[check24] Ensure CloudTrail trails are integrated with CloudWatch Logs"

CHECK_SCORED_check24="SCORED"

-CHECK_TYPE_check24="LEVEL1"

+CHECK_CIS_LEVEL_check24="LEVEL1"

CHECK_SEVERITY_check24="Low"

CHECK_ASFF_TYPE_check24="Software and Configuration Checks/Industry and Regulatory Standards/CIS AWS Foundations Benchmark"

CHECK_ASFF_RESOURCE_TYPE_check24="AwsCloudTrailTrail"

diff --git a/checks/check25 b/checks/check25

index c853cde5..752235fc 100644

--- a/checks/check25

+++ b/checks/check25

@@ -14,7 +14,7 @@

CHECK_ID_check25="2.5"

CHECK_TITLE_check25="[check25] Ensure AWS Config is enabled in all regions"

CHECK_SCORED_check25="SCORED"

-CHECK_TYPE_check25="LEVEL1"

+CHECK_CIS_LEVEL_check25="LEVEL1"

CHECK_SEVERITY_check25="Medium"

CHECK_ASFF_TYPE_check25="Software and Configuration Checks/Industry and Regulatory Standards/CIS AWS Foundations Benchmark"

CHECK_ALTERNATE_check205="check25"

diff --git a/checks/check26 b/checks/check26

index a6663a22..166fbea5 100644

--- a/checks/check26

+++ b/checks/check26

@@ -14,7 +14,7 @@

CHECK_ID_check26="2.6"

CHECK_TITLE_check26="[check26] Ensure S3 bucket access logging is enabled on the CloudTrail S3 bucket"

CHECK_SCORED_check26="SCORED"

-CHECK_TYPE_check26="LEVEL1"

+CHECK_CIS_LEVEL_check26="LEVEL1"

CHECK_SEVERITY_check26="Medium"

CHECK_ASFF_TYPE_check26="Software and Configuration Checks/Industry and Regulatory Standards/CIS AWS Foundations Benchmark"

CHECK_ASFF_RESOURCE_TYPE_check26="AwsS3Bucket"

diff --git a/checks/check27 b/checks/check27

index fa6a432d..c5304c74 100644

--- a/checks/check27

+++ b/checks/check27

@@ -14,7 +14,7 @@

CHECK_ID_check27="2.7"

CHECK_TITLE_check27="[check27] Ensure CloudTrail logs are encrypted at rest using KMS CMKs"

CHECK_SCORED_check27="SCORED"

-CHECK_TYPE_check27="LEVEL2"

+CHECK_CIS_LEVEL_check27="LEVEL2"

CHECK_SEVERITY_check27="Medium"

CHECK_ASFF_TYPE_check27="Software and Configuration Checks/Industry and Regulatory Standards/CIS AWS Foundations Benchmark"

CHECK_ASFF_RESOURCE_TYPE_check27="AwsCloudTrailTrail"

diff --git a/checks/check28 b/checks/check28

index aef746b1..1008a36d 100644

--- a/checks/check28

+++ b/checks/check28

@@ -14,7 +14,7 @@

CHECK_ID_check28="2.8"

CHECK_TITLE_check28="[check28] Ensure rotation for customer created KMS CMKs is enabled"

CHECK_SCORED_check28="SCORED"

-CHECK_TYPE_check28="LEVEL2"

+CHECK_CIS_LEVEL_check28="LEVEL2"

CHECK_SEVERITY_check28="Medium"

CHECK_ASFF_TYPE_check28="Software and Configuration Checks/Industry and Regulatory Standards/CIS AWS Foundations Benchmark"

CHECK_ASFF_RESOURCE_TYPE_check28="AwsKmsKey"

diff --git a/checks/check29 b/checks/check29

index b5023894..dc9f37b4 100644

--- a/checks/check29

+++ b/checks/check29

@@ -14,14 +14,14 @@

CHECK_ID_check29="2.9"

CHECK_TITLE_check29="[check29] Ensure VPC Flow Logging is Enabled in all VPCs"

CHECK_SCORED_check29="SCORED"

-CHECK_TYPE_check29="LEVEL2"

+CHECK_CIS_LEVEL_check29="LEVEL2"

CHECK_SEVERITY_check29="Medium"

CHECK_ASFF_TYPE_check29="Software and Configuration Checks/Industry and Regulatory Standards/CIS AWS Foundations Benchmark"

CHECK_ASFF_RESOURCE_TYPE_check29="AwsEc2Vpc"

CHECK_ALTERNATE_check209="check29"

CHECK_ASFF_COMPLIANCE_TYPE_check29="ens-op.mon.1.aws.flow.1"

CHECK_SERVICENAME_check29="vpc"

-CHECK_RISK_check29='PC Flow Logs provide visibility into network traffic that traverses the VPC and can be used to detect anomalous traffic or insight during security workflows.'

+CHECK_RISK_check29='VPC Flow Logs provide visibility into network traffic that traverses the VPC and can be used to detect anomalous traffic or insight during security workflows.'

CHECK_REMEDIATION_check29='It is recommended that VPC Flow Logs be enabled for packet "Rejects" for VPCs. '

CHECK_DOC_check29='http://docs.aws.amazon.com/AmazonVPC/latest/UserGuide/flow-logs.html '

CHECK_CAF_EPIC_check29='Logging and Monitoring'

diff --git a/checks/check31 b/checks/check31

index 4411c6fa..b568027d 100644

--- a/checks/check31

+++ b/checks/check31

@@ -39,7 +39,7 @@

CHECK_ID_check31="3.1"

CHECK_TITLE_check31="[check31] Ensure a log metric filter and alarm exist for unauthorized API calls"

CHECK_SCORED_check31="SCORED"

-CHECK_TYPE_check31="LEVEL1"

+CHECK_CIS_LEVEL_check31="LEVEL1"

CHECK_SEVERITY_check31="Medium"

CHECK_ASFF_TYPE_check31="Software and Configuration Checks/Industry and Regulatory Standards/CIS AWS Foundations Benchmark"

CHECK_ASFF_RESOURCE_TYPE_check31="AwsCloudTrailTrail"

diff --git a/checks/check310 b/checks/check310

index 0a2d53d9..0b303701 100644

--- a/checks/check310

+++ b/checks/check310

@@ -39,7 +39,7 @@

CHECK_ID_check310="3.10"

CHECK_TITLE_check310="[check310] Ensure a log metric filter and alarm exist for security group changes"

CHECK_SCORED_check310="SCORED"

-CHECK_TYPE_check310="LEVEL2"

+CHECK_CIS_LEVEL_check310="LEVEL2"

CHECK_SEVERITY_check310="Medium"

CHECK_ASFF_TYPE_check310="Software and Configuration Checks/Industry and Regulatory Standards/CIS AWS Foundations Benchmark"

CHECK_ASFF_RESOURCE_TYPE_check310="AwsCloudTrailTrail"

diff --git a/checks/check311 b/checks/check311

index fb66edb6..21f0c612 100644

--- a/checks/check311

+++ b/checks/check311

@@ -39,7 +39,7 @@

CHECK_ID_check311="3.11"

CHECK_TITLE_check311="[check311] Ensure a log metric filter and alarm exist for changes to Network Access Control Lists (NACL)"

CHECK_SCORED_check311="SCORED"

-CHECK_TYPE_check311="LEVEL2"

+CHECK_CIS_LEVEL_check311="LEVEL2"

CHECK_SEVERITY_check311="Medium"

CHECK_ASFF_TYPE_check311="Software and Configuration Checks/Industry and Regulatory Standards/CIS AWS Foundations Benchmark"

CHECK_ASFF_RESOURCE_TYPE_check311="AwsCloudTrailTrail"

diff --git a/checks/check312 b/checks/check312

index 1de26238..4391b8eb 100644

--- a/checks/check312

+++ b/checks/check312

@@ -39,7 +39,7 @@

CHECK_ID_check312="3.12"

CHECK_TITLE_check312="[check312] Ensure a log metric filter and alarm exist for changes to network gateways"

CHECK_SCORED_check312="SCORED"

-CHECK_TYPE_check312="LEVEL1"

+CHECK_CIS_LEVEL_check312="LEVEL1"

CHECK_SEVERITY_check312="Medium"

CHECK_ASFF_TYPE_check312="Software and Configuration Checks/Industry and Regulatory Standards/CIS AWS Foundations Benchmark"

CHECK_ASFF_RESOURCE_TYPE_check312="AwsCloudTrailTrail"

diff --git a/checks/check313 b/checks/check313

index 2ce23a51..abf64ad8 100644

--- a/checks/check313

+++ b/checks/check313

@@ -39,7 +39,7 @@

CHECK_ID_check313="3.13"

CHECK_TITLE_check313="[check313] Ensure a log metric filter and alarm exist for route table changes"

CHECK_SCORED_check313="SCORED"

-CHECK_TYPE_check313="LEVEL1"

+CHECK_CIS_LEVEL_check313="LEVEL1"

CHECK_SEVERITY_check313="Medium"

CHECK_ASFF_TYPE_check313="Software and Configuration Checks/Industry and Regulatory Standards/CIS AWS Foundations Benchmark"

CHECK_ASFF_RESOURCE_TYPE_check313="AwsCloudTrailTrail"

diff --git a/checks/check314 b/checks/check314

index a0d728bb..b7bdf533 100644

--- a/checks/check314

+++ b/checks/check314

@@ -39,7 +39,7 @@

CHECK_ID_check314="3.14"

CHECK_TITLE_check314="[check314] Ensure a log metric filter and alarm exist for VPC changes"

CHECK_SCORED_check314="SCORED"

-CHECK_TYPE_check314="LEVEL1"

+CHECK_CIS_LEVEL_check314="LEVEL1"

CHECK_SEVERITY_check314="Medium"

CHECK_ASFF_TYPE_check314="Software and Configuration Checks/Industry and Regulatory Standards/CIS AWS Foundations Benchmark"

CHECK_ASFF_RESOURCE_TYPE_check314="AwsCloudTrailTrail"

diff --git a/checks/check32 b/checks/check32

index b932b13a..9cc24aaa 100644

--- a/checks/check32

+++ b/checks/check32

@@ -39,7 +39,7 @@

CHECK_ID_check32="3.2"

CHECK_TITLE_check32="[check32] Ensure a log metric filter and alarm exist for Management Console sign-in without MFA"

CHECK_SCORED_check32="SCORED"

-CHECK_TYPE_check32="LEVEL1"

+CHECK_CIS_LEVEL_check32="LEVEL1"

CHECK_SEVERITY_check32="Medium"

CHECK_ASFF_TYPE_check32="Software and Configuration Checks/Industry and Regulatory Standards/CIS AWS Foundations Benchmark"

CHECK_ASFF_RESOURCE_TYPE_check32="AwsCloudTrailTrail"

diff --git a/checks/check33 b/checks/check33

index 1cd54328..26b94710 100644

--- a/checks/check33

+++ b/checks/check33

@@ -39,7 +39,7 @@

CHECK_ID_check33="3.3"

CHECK_TITLE_check33="[check33] Ensure a log metric filter and alarm exist for usage of root account"

CHECK_SCORED_check33="SCORED"

-CHECK_TYPE_check33="LEVEL1"

+CHECK_CIS_LEVEL_check33="LEVEL1"

CHECK_SEVERITY_check33="Medium"

CHECK_ASFF_TYPE_check33="Software and Configuration Checks/Industry and Regulatory Standards/CIS AWS Foundations Benchmark"

CHECK_ASFF_RESOURCE_TYPE_check33="AwsCloudTrailTrail"

diff --git a/checks/check34 b/checks/check34

index 250044e0..beb53bf1 100644

--- a/checks/check34

+++ b/checks/check34

@@ -39,7 +39,7 @@

CHECK_ID_check34="3.4"

CHECK_TITLE_check34="[check34] Ensure a log metric filter and alarm exist for IAM policy changes"

CHECK_SCORED_check34="SCORED"

-CHECK_TYPE_check34="LEVEL1"

+CHECK_CIS_LEVEL_check34="LEVEL1"

CHECK_SEVERITY_check34="Medium"

CHECK_ASFF_TYPE_check34="Software and Configuration Checks/Industry and Regulatory Standards/CIS AWS Foundations Benchmark"

CHECK_ASFF_RESOURCE_TYPE_check34="AwsCloudTrailTrail"

diff --git a/checks/check35 b/checks/check35

index bae1f254..089bd5ab 100644

--- a/checks/check35

+++ b/checks/check35

@@ -39,7 +39,7 @@

CHECK_ID_check35="3.5"

CHECK_TITLE_check35="[check35] Ensure a log metric filter and alarm exist for CloudTrail configuration changes"

CHECK_SCORED_check35="SCORED"

-CHECK_TYPE_check35="LEVEL1"

+CHECK_CIS_LEVEL_check35="LEVEL1"

CHECK_SEVERITY_check35="Medium"

CHECK_ASFF_TYPE_check35="Software and Configuration Checks/Industry and Regulatory Standards/CIS AWS Foundations Benchmark"

CHECK_ASFF_RESOURCE_TYPE_check35="AwsCloudTrailTrail"

diff --git a/checks/check36 b/checks/check36

index fc9e4c39..631ed607 100644

--- a/checks/check36

+++ b/checks/check36

@@ -39,7 +39,7 @@

CHECK_ID_check36="3.6"

CHECK_TITLE_check36="[check36] Ensure a log metric filter and alarm exist for AWS Management Console authentication failures"

CHECK_SCORED_check36="SCORED"

-CHECK_TYPE_check36="LEVEL2"

+CHECK_CIS_LEVEL_check36="LEVEL2"

CHECK_SEVERITY_check36="Medium"

CHECK_ASFF_TYPE_check36="Software and Configuration Checks/Industry and Regulatory Standards/CIS AWS Foundations Benchmark"

CHECK_ASFF_RESOURCE_TYPE_check36="AwsCloudTrailTrail"

diff --git a/checks/check37 b/checks/check37

index 03f593ea..d7e8668b 100644

--- a/checks/check37

+++ b/checks/check37

@@ -39,7 +39,7 @@

CHECK_ID_check37="3.7"

CHECK_TITLE_check37="[check37] Ensure a log metric filter and alarm exist for disabling or scheduled deletion of customer created KMS CMKs"

CHECK_SCORED_check37="SCORED"

-CHECK_TYPE_check37="LEVEL2"

+CHECK_CIS_LEVEL_check37="LEVEL2"

CHECK_SEVERITY_check37="Medium"

CHECK_ASFF_TYPE_check37="Software and Configuration Checks/Industry and Regulatory Standards/CIS AWS Foundations Benchmark"

CHECK_ASFF_RESOURCE_TYPE_check37="AwsCloudTrailTrail"

diff --git a/checks/check38 b/checks/check38

index 9d81443c..ce34e64d 100644

--- a/checks/check38

+++ b/checks/check38

@@ -39,7 +39,7 @@

CHECK_ID_check38="3.8"

CHECK_TITLE_check38="[check38] Ensure a log metric filter and alarm exist for S3 bucket policy changes"

CHECK_SCORED_check38="SCORED"

-CHECK_TYPE_check38="LEVEL1"

+CHECK_CIS_LEVEL_check38="LEVEL1"

CHECK_SEVERITY_check38="Medium"

CHECK_ASFF_TYPE_check38="Software and Configuration Checks/Industry and Regulatory Standards/CIS AWS Foundations Benchmark"

CHECK_ASFF_RESOURCE_TYPE_check38="AwsCloudTrailTrail"

diff --git a/checks/check39 b/checks/check39

index aabbd359..15be0316 100644

--- a/checks/check39

+++ b/checks/check39

@@ -39,7 +39,7 @@

CHECK_ID_check39="3.9"

CHECK_TITLE_check39="[check39] Ensure a log metric filter and alarm exist for AWS Config configuration changes"

CHECK_SCORED_check39="SCORED"

-CHECK_TYPE_check39="LEVEL2"

+CHECK_CIS_LEVEL_check39="LEVEL2"

CHECK_SEVERITY_check39="Medium"

CHECK_ASFF_TYPE_check39="Software and Configuration Checks/Industry and Regulatory Standards/CIS AWS Foundations Benchmark"

CHECK_ASFF_RESOURCE_TYPE_check39="AwsCloudTrailTrail"

diff --git a/checks/check41 b/checks/check41

index 02f0fbf5..f8af5e9b 100644

--- a/checks/check41

+++ b/checks/check41

@@ -14,7 +14,7 @@

CHECK_ID_check41="4.1"

CHECK_TITLE_check41="[check41] Ensure no security groups allow ingress from 0.0.0.0/0 or ::/0 to port 22"

CHECK_SCORED_check41="SCORED"

-CHECK_TYPE_check41="LEVEL2"

+CHECK_CIS_LEVEL_check41="LEVEL2"

CHECK_SEVERITY_check41="High"

CHECK_ASFF_TYPE_check41="Software and Configuration Checks/Industry and Regulatory Standards/CIS AWS Foundations Benchmark"

CHECK_ASFF_RESOURCE_TYPE_check41="AwsEc2SecurityGroup"

diff --git a/checks/check42 b/checks/check42

index a2bf70fd..cf4b3cf2 100644

--- a/checks/check42

+++ b/checks/check42

@@ -14,7 +14,7 @@

CHECK_ID_check42="4.2"

CHECK_TITLE_check42="[check42] Ensure no security groups allow ingress from 0.0.0.0/0 or ::/0 to port 3389"

CHECK_SCORED_check42="SCORED"

-CHECK_TYPE_check42="LEVEL2"

+CHECK_CIS_LEVEL_check42="LEVEL2"

CHECK_SEVERITY_check42="High"

CHECK_ASFF_TYPE_check42="Software and Configuration Checks/Industry and Regulatory Standards/CIS AWS Foundations Benchmark"

CHECK_ASFF_RESOURCE_TYPE_check42="AwsEc2SecurityGroup"

diff --git a/checks/check43 b/checks/check43

index 205f4eb3..c3a57e12 100644

--- a/checks/check43

+++ b/checks/check43

@@ -14,7 +14,7 @@

CHECK_ID_check43="4.3"

CHECK_TITLE_check43="[check43] Ensure the default security group of every VPC restricts all traffic"

CHECK_SCORED_check43="SCORED"

-CHECK_TYPE_check43="LEVEL2"

+CHECK_CIS_LEVEL_check43="LEVEL2"

CHECK_SEVERITY_check43="High"

CHECK_ASFF_TYPE_check43="Software and Configuration Checks/Industry and Regulatory Standards/CIS AWS Foundations Benchmark"

CHECK_ASFF_RESOURCE_TYPE_check43="AwsEc2SecurityGroup"

diff --git a/checks/check44 b/checks/check44

index e5328c29..e6b8aee9 100644

--- a/checks/check44

+++ b/checks/check44

@@ -14,7 +14,7 @@

CHECK_ID_check44="4.4"

CHECK_TITLE_check44="[check44] Ensure routing tables for VPC peering are \"least access\""

CHECK_SCORED_check44="NOT_SCORED"

-CHECK_TYPE_check44="LEVEL2"

+CHECK_CIS_LEVEL_check44="LEVEL2"

CHECK_SEVERITY_check44="Medium"

CHECK_ASFF_TYPE_check44="Software and Configuration Checks/Industry and Regulatory Standards/CIS AWS Foundations Benchmark"

CHECK_ASFF_RESOURCE_TYPE_check44="AwsEc2Vpc"

diff --git a/checks/check45 b/checks/check45

index d68fc140..c9a461e9 100644

--- a/checks/check45

+++ b/checks/check45

@@ -14,7 +14,7 @@

CHECK_ID_check45="4.5"

CHECK_TITLE_check45="[check45] Ensure no Network ACLs allow ingress from 0.0.0.0/0 to SSH port 22"

CHECK_SCORED_check45="SCORED"

-CHECK_TYPE_check45="LEVEL2"

+CHECK_CIS_LEVEL_check45="LEVEL2"

CHECK_SEVERITY_check45="High"

CHECK_ASFF_TYPE_check45="Software and Configuration Checks/Industry and Regulatory Standards/CIS AWS Foundations Benchmark"

CHECK_ASFF_RESOURCE_TYPE_check45="AwsEc2NetworkAcl"

diff --git a/checks/check46 b/checks/check46

index 02c2101b..72395991 100644

--- a/checks/check46

+++ b/checks/check46

@@ -14,7 +14,7 @@

CHECK_ID_check46="4.6"

CHECK_TITLE_check46="[check46] Ensure no Network ACLs allow ingress from 0.0.0.0/0 to Microsoft RDP port 3389"

CHECK_SCORED_check46="SCORED"

-CHECK_TYPE_check46="LEVEL2"

+CHECK_CIS_LEVEL_check46="LEVEL2"

CHECK_SEVERITY_check46="High"

CHECK_ASFF_TYPE_check46="Software and Configuration Checks/Industry and Regulatory Standards/CIS AWS Foundations Benchmark"

CHECK_ASFF_RESOURCE_TYPE_check46="AwsEc2NetworkAcl"

diff --git a/checks/check_extra71 b/checks/check_extra71

index 4bf1706c..ca1ecb50 100644

--- a/checks/check_extra71

+++ b/checks/check_extra71

@@ -13,7 +13,7 @@

CHECK_ID_extra71="7.1"

CHECK_TITLE_extra71="[extra71] Ensure users of groups with AdministratorAccess policy have MFA tokens enabled"

CHECK_SCORED_extra71="NOT_SCORED"

-CHECK_TYPE_extra71="EXTRA"

+CHECK_CIS_LEVEL_extra71="EXTRA"

CHECK_SEVERITY_extra71="High"

CHECK_ASFF_RESOURCE_TYPE_extra71="AwsIamUser"

CHECK_ALTERNATE_extra701="extra71"

diff --git a/checks/check_extra710 b/checks/check_extra710

index a1c10252..ffd3b035 100644

--- a/checks/check_extra710

+++ b/checks/check_extra710

@@ -13,7 +13,7 @@

CHECK_ID_extra710="7.10"

CHECK_TITLE_extra710="[extra710] Check for internet facing EC2 Instances"

CHECK_SCORED_extra710="NOT_SCORED"

-CHECK_TYPE_extra710="EXTRA"

+CHECK_CIS_LEVEL_extra710="EXTRA"

CHECK_SEVERITY_extra710="Medium"

CHECK_ASFF_RESOURCE_TYPE_extra710="AwsEc2Instance"

CHECK_ALTERNATE_check710="extra710"

diff --git a/checks/check_extra7100 b/checks/check_extra7100

index 8e2a3807..cd5d8e01 100644

--- a/checks/check_extra7100

+++ b/checks/check_extra7100

@@ -17,7 +17,7 @@

CHECK_ID_extra7100="7.100"

CHECK_TITLE_extra7100="[extra7100] Ensure that no custom IAM policies exist which allow permissive role assumption (e.g. sts:AssumeRole on *)"

CHECK_SCORED_extra7100="NOT_SCORED"

-CHECK_TYPE_extra7100="EXTRA"

+CHECK_CIS_LEVEL_extra7100="EXTRA"

CHECK_SEVERITY_extra7100="Critical"

CHECK_ASFF_RESOURCE_TYPE_extra7100="AwsIamPolicy"

CHECK_ALTERNATE_check7100="extra7100"

diff --git a/checks/check_extra7101 b/checks/check_extra7101

index e1ba8dbb..a4fd714c 100644

--- a/checks/check_extra7101

+++ b/checks/check_extra7101

@@ -14,7 +14,7 @@

CHECK_ID_extra7101="7.101"

CHECK_TITLE_extra7101="[extra7101] Check if Amazon Elasticsearch Service (ES) domains have audit logging enabled"

CHECK_SCORED_extra7101="NOT_SCORED"

-CHECK_TYPE_extra7101="EXTRA"

+CHECK_CIS_LEVEL_extra7101="EXTRA"

CHECK_SEVERITY_extra7101="Low"

CHECK_ASFF_RESOURCE_TYPE_extra7101="AwsElasticsearchDomain"

CHECK_ALTERNATE_check7101="extra7101"

diff --git a/checks/check_extra7102 b/checks/check_extra7102

index b9efcc29..f16c3908 100644

--- a/checks/check_extra7102

+++ b/checks/check_extra7102

@@ -13,7 +13,7 @@

CHECK_ID_extra7102="7.102"

CHECK_TITLE_extra7102="[extra7102] Check if any of the Elastic or Public IP are in Shodan (requires Shodan API KEY)"

CHECK_SCORED_extra7102="NOT_SCORED"

-CHECK_TYPE_extra7102="EXTRA"

+CHECK_CIS_LEVEL_extra7102="EXTRA"

CHECK_SEVERITY_extra7102="High"

CHECK_ASFF_RESOURCE_TYPE_extra7102="AwsEc2Eip"

CHECK_ALTERNATE_check7102="extra7102"

diff --git a/checks/check_extra7103 b/checks/check_extra7103

index 558a1d94..12ace203 100644

--- a/checks/check_extra7103

+++ b/checks/check_extra7103

@@ -14,7 +14,7 @@

CHECK_ID_extra7103="7.103"

CHECK_TITLE_extra7103="[extra7103] Check if Amazon SageMaker Notebook instances have root access disabled"

CHECK_SCORED_extra7103="NOT_SCORED"

-CHECK_TYPE_extra7103="EXTRA"

+CHECK_CIS_LEVEL_extra7103="EXTRA"

CHECK_ASFF_RESOURCE_TYPE_extra7103="AwsSageMakerNotebookInstance"

CHECK_ALTERNATE_check7103="extra7103"

CHECK_SEVERITY_extra7103="Medium"

diff --git a/checks/check_extra7104 b/checks/check_extra7104

index 00b9b065..7697ad50 100644

--- a/checks/check_extra7104

+++ b/checks/check_extra7104

@@ -14,7 +14,7 @@

CHECK_ID_extra7104="7.104"

CHECK_TITLE_extra7104="[extra7104] Check if Amazon SageMaker Notebook instances have VPC settings configured"

CHECK_SCORED_extra7104="NOT_SCORED"

-CHECK_TYPE_extra7104="EXTRA"

+CHECK_CIS_LEVEL_extra7104="EXTRA"

CHECK_ASFF_RESOURCE_TYPE_extra7104="AwsSageMakerNotebookInstance"

CHECK_ALTERNATE_check7104="extra7104"

CHECK_SEVERITY_extra7104="Medium"

diff --git a/checks/check_extra7105 b/checks/check_extra7105

index 1316a431..1b2d2c89 100644

--- a/checks/check_extra7105

+++ b/checks/check_extra7105

@@ -14,7 +14,7 @@

CHECK_ID_extra7105="7.105"

CHECK_TITLE_extra7105="[extra7105] Check if Amazon SageMaker Models have network isolation enabled"

CHECK_SCORED_extra7105="NOT_SCORED"

-CHECK_TYPE_extra7105="EXTRA"

+CHECK_CIS_LEVEL_extra7105="EXTRA"

CHECK_ASFF_RESOURCE_TYPE_extra7105="AwsSageMakerModel"

CHECK_ALTERNATE_check7105="extra7105"

CHECK_SEVERITY_extra7105="Medium"

diff --git a/checks/check_extra7106 b/checks/check_extra7106

index e49b8a50..af09f269 100644

--- a/checks/check_extra7106

+++ b/checks/check_extra7106

@@ -14,7 +14,7 @@

CHECK_ID_extra7106="7.106"

CHECK_TITLE_extra7106="[extra7106] Check if Amazon SageMaker Models have VPC settings configured"

CHECK_SCORED_extra7106="NOT_SCORED"

-CHECK_TYPE_extra7106="EXTRA"

+CHECK_CIS_LEVEL_extra7106="EXTRA"

CHECK_ASFF_RESOURCE_TYPE_extra7106="AwsSageMakerModel"

CHECK_ALTERNATE_check7106="extra7106"

CHECK_SEVERITY_extra7106="Medium"

diff --git a/checks/check_extra7107 b/checks/check_extra7107

index 2f8c70a6..e5536e87 100644

--- a/checks/check_extra7107

+++ b/checks/check_extra7107

@@ -14,7 +14,7 @@

CHECK_ID_extra7107="7.107"

CHECK_TITLE_extra7107="[extra7107] Check if Amazon SageMaker Training jobs have intercontainer encryption enabled"

CHECK_SCORED_extra7107="NOT_SCORED"

-CHECK_TYPE_extra7107="EXTRA"

+CHECK_CIS_LEVEL_extra7107="EXTRA"

CHECK_ASFF_RESOURCE_TYPE_extra7107="AwsSageMakerNotebookInstance"

CHECK_ALTERNATE_check7107="extra7107"

CHECK_SEVERITY_extra7107="Medium"

diff --git a/checks/check_extra7108 b/checks/check_extra7108

index f84f6997..2956afe8 100644

--- a/checks/check_extra7108

+++ b/checks/check_extra7108

@@ -14,7 +14,7 @@

CHECK_ID_extra7108="7.108"

CHECK_TITLE_extra7108="[extra7108] Check if Amazon SageMaker Training jobs have volume and output with KMS encryption enabled"

CHECK_SCORED_extra7108="NOT_SCORED"

-CHECK_TYPE_extra7108="EXTRA"

+CHECK_CIS_LEVEL_extra7108="EXTRA"

CHECK_ASFF_RESOURCE_TYPE_extra7108="AwsSageMakerNotebookInstance"

CHECK_ALTERNATE_check7108="extra7108"

CHECK_SEVERITY_extra7108="Medium"

diff --git a/checks/check_extra7109 b/checks/check_extra7109

index 80778fd2..90d036e0 100644

--- a/checks/check_extra7109

+++ b/checks/check_extra7109

@@ -14,7 +14,7 @@

CHECK_ID_extra7109="7.109"

CHECK_TITLE_extra7109="[extra7109] Check if Amazon SageMaker Training jobs have network isolation enabled"

CHECK_SCORED_extra7109="NOT_SCORED"

-CHECK_TYPE_extra7109="EXTRA"

+CHECK_CIS_LEVEL_extra7109="EXTRA"

CHECK_ASFF_RESOURCE_TYPE_extra7109="AwsSageMakerNotebookInstance"

CHECK_ALTERNATE_check7109="extra7109"

CHECK_SEVERITY_extra7109="Medium"

diff --git a/checks/check_extra711 b/checks/check_extra711

index 4a0b5d66..34e2947a 100644

--- a/checks/check_extra711

+++ b/checks/check_extra711

@@ -13,7 +13,7 @@

CHECK_ID_extra711="7.11"

CHECK_TITLE_extra711="[extra711] Check for Publicly Accessible Redshift Clusters"

CHECK_SCORED_extra711="NOT_SCORED"

-CHECK_TYPE_extra711="EXTRA"

+CHECK_CIS_LEVEL_extra711="EXTRA"

CHECK_SEVERITY_extra711="High"

CHECK_ASFF_RESOURCE_TYPE_extra711="AwsRedshiftCluster"

CHECK_ALTERNATE_check711="extra711"

diff --git a/checks/check_extra7110 b/checks/check_extra7110

index 5a6ebefc..448e2308 100644

--- a/checks/check_extra7110

+++ b/checks/check_extra7110

@@ -14,7 +14,7 @@

CHECK_ID_extra7110="7.110"

CHECK_TITLE_extra7110="[extra7110] Check if Amazon SageMaker Training job have VPC settings configured."

CHECK_SCORED_extra7110="NOT_SCORED"

-CHECK_TYPE_extra7110="EXTRA"

+CHECK_CIS_LEVEL_extra7110="EXTRA"

CHECK_ASFF_RESOURCE_TYPE_extra7110="AwsSageMakerNotebookInstance"